Local LLMs: Setup Guide for Desktop

If you hold a Triplo AI PRO license or subscription, you can use Ollama or LMStudio to serve local models to Triplo AI. The Ollama integration is still in BETA, so expect changes over time. Follow the steps below to set it up.

Step 1: Install Ollama or LMStudio on Your Computer/Server

Ollama Windows Installation

Download the executable file from the Ollama website.

Run the downloaded executable file.

Ollama will be installed automatically.

Ollama macOS Installation

Download the Ollama file from the Ollama website.

Once the download completes, unzip the downloaded file.

Drag the Ollama.app folder into your Applications folder.

Ollama Linux Installation

Open your terminal.

Run the following command: curl -fsSL https://ollama.com/install.sh | sh
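After installing, you may want to confirm that the ollama command is available in your terminal. The sketch below is one way to do that on macOS or Linux, assuming a POSIX shell. The names have_cmd and check_ollama_cli are illustrative helpers, not part of Ollama.

```shell
# Return success if the given command name can be found by the shell.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# Print the installed Ollama version, or report that the CLI is missing.
# (check_ollama_cli is a hypothetical helper name used only in this guide.)
check_ollama_cli() {
  if have_cmd ollama; then
    ollama --version
  else
    echo "ollama not found on PATH" >&2
    return 1
  fi
}
```

Paste the functions into your shell and run check_ollama_cli. If it reports that ollama is missing, opening a new terminal window or re-running the installer is usually the first thing to try.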

Step 2: Select the Desired Models

Visit Ollama's model library (or the LMStudio equivalent) to browse and select the models you want to use.

If you're not sure what model to use, pick a small one (like phi3:3.8b or gemma:2b).

Step 3: Download/Install the Selected Models

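Run the following command in your terminal, replacing %model_id% with the model you selected. It downloads the model if it is not already installed and then opens an interactive chat session: ollama run %model_id%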

Example: ollama run deepseek-r1 OR ollama run mannix/phi3-mini-4k
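If you only want to download a model without opening a chat session, ollama pull followed by ollama list is an alternative. This is a minimal sketch, assuming a POSIX shell. The name pull_model is a hypothetical helper, and the default model is simply one of the small models suggested in Step 2.

```shell
# Download a model without starting a chat, then show the installed models.
# pull_model is an illustrative helper; pass the model name as the first argument.
pull_model() {
  model_id="${1:-phi3:3.8b}"   # default is only an example from this guide
  ollama pull "$model_id" && ollama list
}
```

For example, pull_model gemma:2b downloads that model and then prints the list of models stored locally, so you can confirm it is there before moving on.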

Step 4: Quit Ollama

To leave the interactive chat session opened in Step 3, type the following at its prompt: /bye

Step 5: Start the Ollama Service

Run the following command to start the Ollama service: ollama serve

You should see output similar to the following:

Generating new private key. Your new public key is:

ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIH8sTn5QJ3vRgXeMZQ68Whqj4uVfZlWnFJkz/aMrKQv

Error: listen tcp 127.0.0.1:11434

Step 6: Copy the Address with the Port

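In the example above, the address is 127.0.0.1:11434. An Error: listen tcp line like the one shown typically means an Ollama instance (for example, the desktop app) is already listening on that port. The address to copy is the same either way.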

Add http:// to the beginning and copy the resulting address: http://127.0.0.1:11434

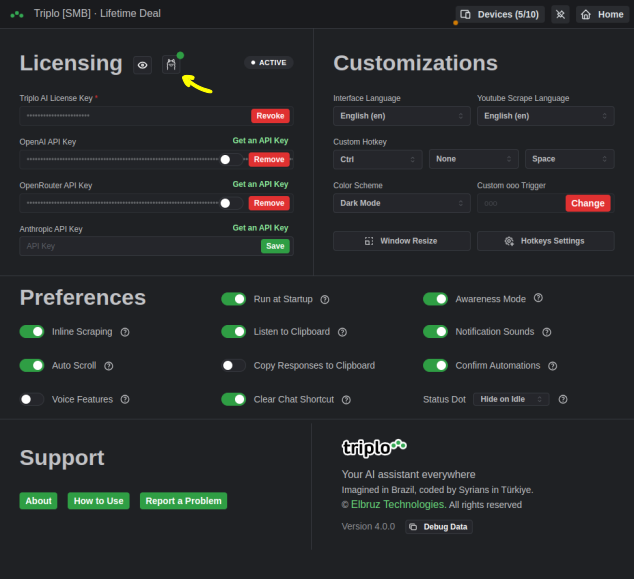
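The address rule in this step can be sketched as a small shell function. It is only an illustration, assuming a POSIX shell, and to_base_url is a hypothetical name: it adds http:// when no scheme is present and drops a trailing slash.

```shell
# Turn a host:port pair into the base URL form this guide asks for.
# Addresses that already start with http:// or https:// are left as they are,
# apart from removing one trailing slash.
to_base_url() {
  case "$1" in
    http://*|https://*) printf '%s\n' "${1%/}" ;;
    *)                  printf 'http://%s\n' "${1%/}" ;;
  esac
}
```

Running to_base_url 127.0.0.1:11434 prints http://127.0.0.1:11434, which is the value to paste in the next step.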

Step 7: Paste it into Your Triplo AI Ollama Settings

Paste the copied address (with http://) into your Triplo AI Ollama configuration settings to connect.

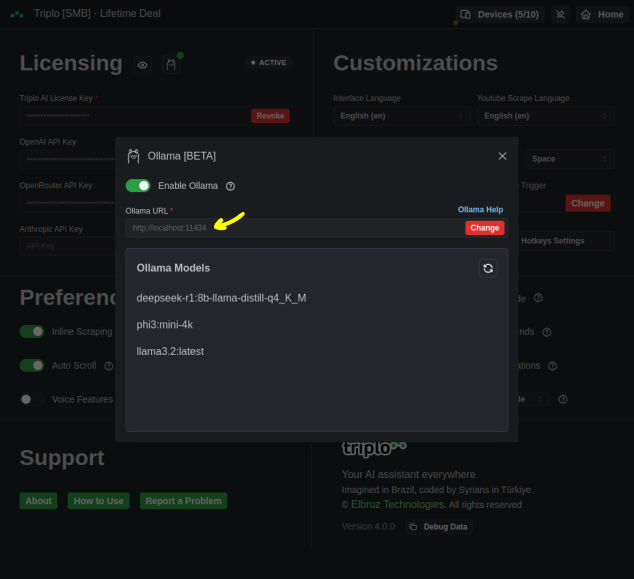

Step 8: Paste the path/port of your Ollama Server

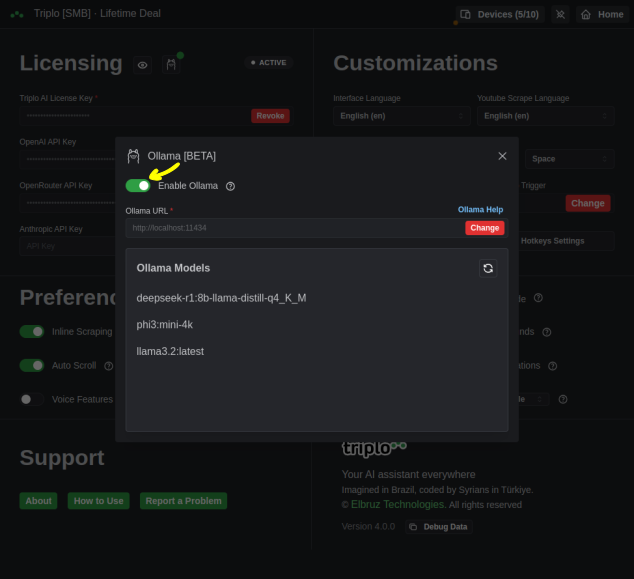

Step 9: Enable Your Ollama

Once Ollama is enabled, you can select any of the models available on your Ollama service directly in Triplo AI.
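If no models show up, it can help to ask the Ollama server directly which models it has. The sketch below assumes curl is installed and that the server is at the default address used in this guide. It calls Ollama's /api/tags endpoint, which lists locally installed models. Whether Triplo AI uses the same endpoint internally is not stated here, and list_ollama_models is a hypothetical helper name.

```shell
# Ask an Ollama server which models it has installed (GET /api/tags).
# The first argument is the base URL; the default matches the address in this guide.
list_ollama_models() {
  base_url="${1:-http://127.0.0.1:11434}"
  # -f: fail on HTTP errors, -sS: quiet but still show errors, 5-second timeout
  curl -fsS --max-time 5 "${base_url%/}/api/tags"
}
```

A JSON response containing a models list means the server is reachable at that address. A connection error suggests the service is not running or the address or port differs from what was entered in Triplo AI.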

This guide centers on Ollama, but you can achieve the same results with LMStudio. Simply follow its installation steps and activate the LMStudio Server. Once set up, enter the correct URL in your Triplo AI desktop client to access and prompt your local models through Triplo AI.
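To check an LMStudio server before entering its URL, a similar request can be used. This sketch rests on two assumptions that are not confirmed by this guide: that the LMStudio server listens on port 1234 by default, and that it exposes an OpenAI-style /v1/models endpoint. Check the address shown in LMStudio's server screen and adjust as needed. The name list_lmstudio_models is a hypothetical helper.

```shell
# Ask an LMStudio server which models it exposes.
# Assumptions: default address http://localhost:1234 and an OpenAI-style /v1/models route.
list_lmstudio_models() {
  base_url="${1:-http://localhost:1234}"
  curl -fsS --max-time 5 "${base_url%/}/v1/models"
}
```

If the request succeeds, the base URL you passed in is the one to try in your Triplo AI desktop client.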

© Elbruz Technologies. All Rights reserved